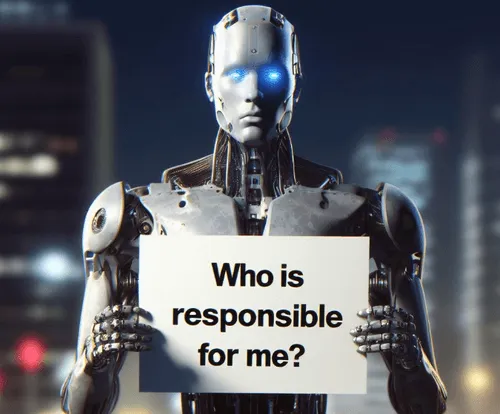

We live in an incentive-based system. Organisations are set up with certain roles and responsibilities to their stakeholders and they are incentivised by those stakeholders to perform.

The media has a role to provide attention-grabbing news in order to make a profit for shareholders, and we shouldn’t blame them for that. But we would hope that they do that responsibly, with honesty and integrity, and without twisting the AI narrative.

The government has a role to regulate industries for the good of society, but good regulation takes time and can be slow-moving, and we shouldn’t blame them for that. But we would hope that they respond to the immediate and future concerns of AI with priority and proper consultation.

Technology companies have a role to innovate and provide products that push the boundaries and solve problems, but innovation by nature is risky and so we shouldn’t blame them for taking risks. But we would hope that with AI those risks are considered and mitigated adequately.

Educators have a role to help students achieve good results, but that focus is short-termist, and sometimes we forget that a child needs more than good results to succeed in life. We should not blame educators for that, but we would hope that they consider the mental, emotional and physical wellbeing of the child when introducing AI in the classroom.

AI is coming at speed with immense power and will turn many of our lives upside down. It is like social media on steroids. If we had known then what we know now about social media and its impacts on young people and wider society, we would have, and should have, done more.

It is imperative that we come together and work as one, combining our voices rather than pointing fingers.

We are not kids fighting in the playground; we are a community coming together to seek a better life.