The prompt below was used to generate high- and low-quality answers for a Year 9 Physics class learning about terminal velocity. Y9 pupils typically find this topic academically challenging, as it requires a multi-step explanation as well as spatial and logical reasoning.

“You are an expert Physics teacher with an in-depth knowledge of pedagogical techniques and decades of experience in helping pupils learn. Your Y9 class are studying Edexcel IGCSE Physics and have just finished learning about terminal velocity and are now about to answer the following question: ‘Explain how a skydiver reaches terminal velocity’. Write two answers to this question. One should be an exemplar answer achieving full marks. The other should contain several mistakes and misconceptions receiving very few marks. Limit each answer to 150 words. Explain why the great answer is great and why the bad answer is wrong. Ask me some clarification questions first and then produce the answers.”

The prompt was entered into ChatGPT. Its outputs were then improved in two ways: through further prompting (mostly to lengthen the initial answers) and through the teacher's own subject knowledge and knowledge of where this topic sits in the scheme of work (see outputs below). The process was relatively quick, taking approximately 20 minutes from start to finish.

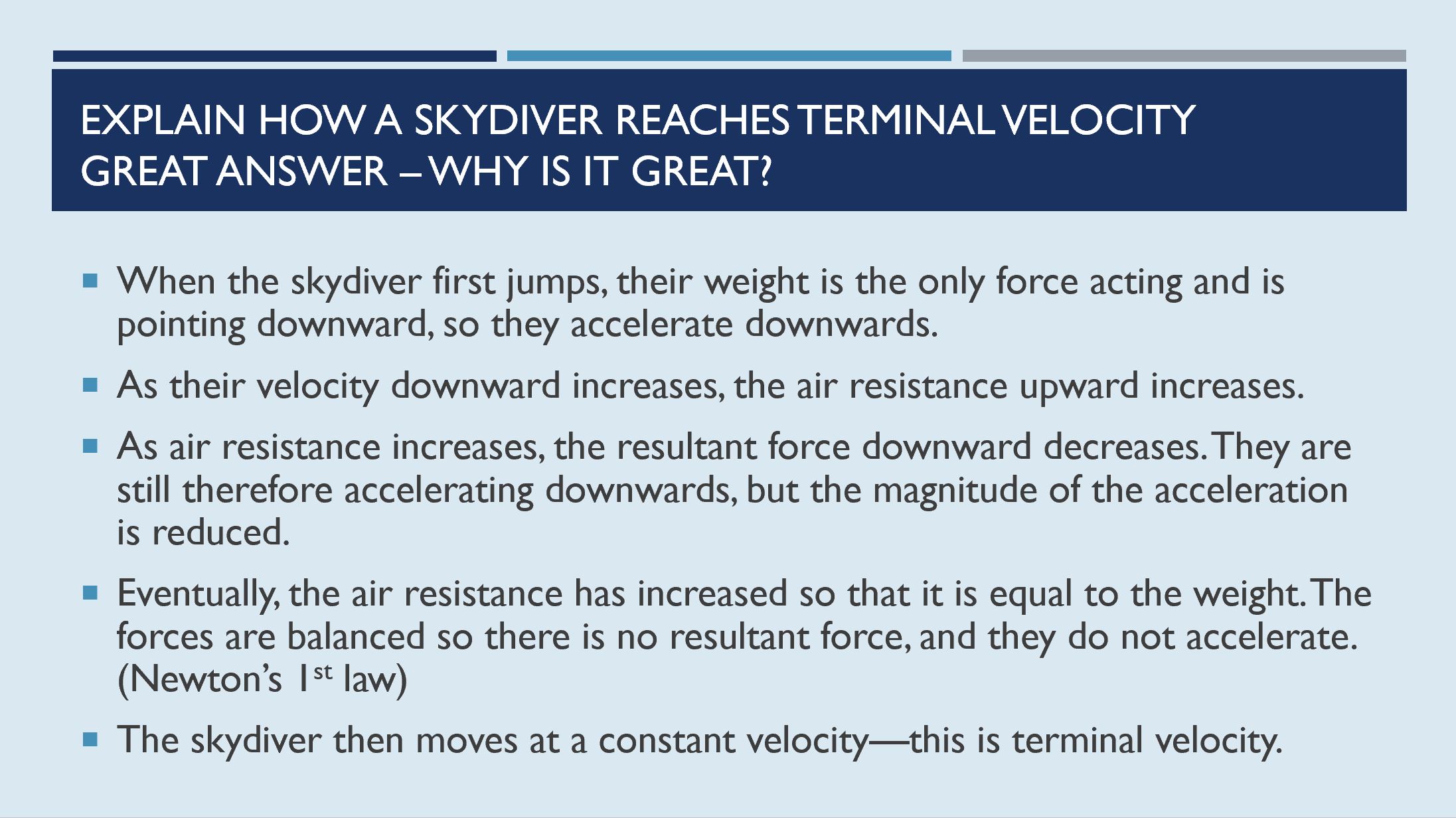

Having first been taught the topic using more traditional methods, pupils were then given a printed copy of the high-quality answer, which they evaluated in groups of three. This prompted a useful discussion about structuring an answer logically, naming the forces involved and stating their directions.

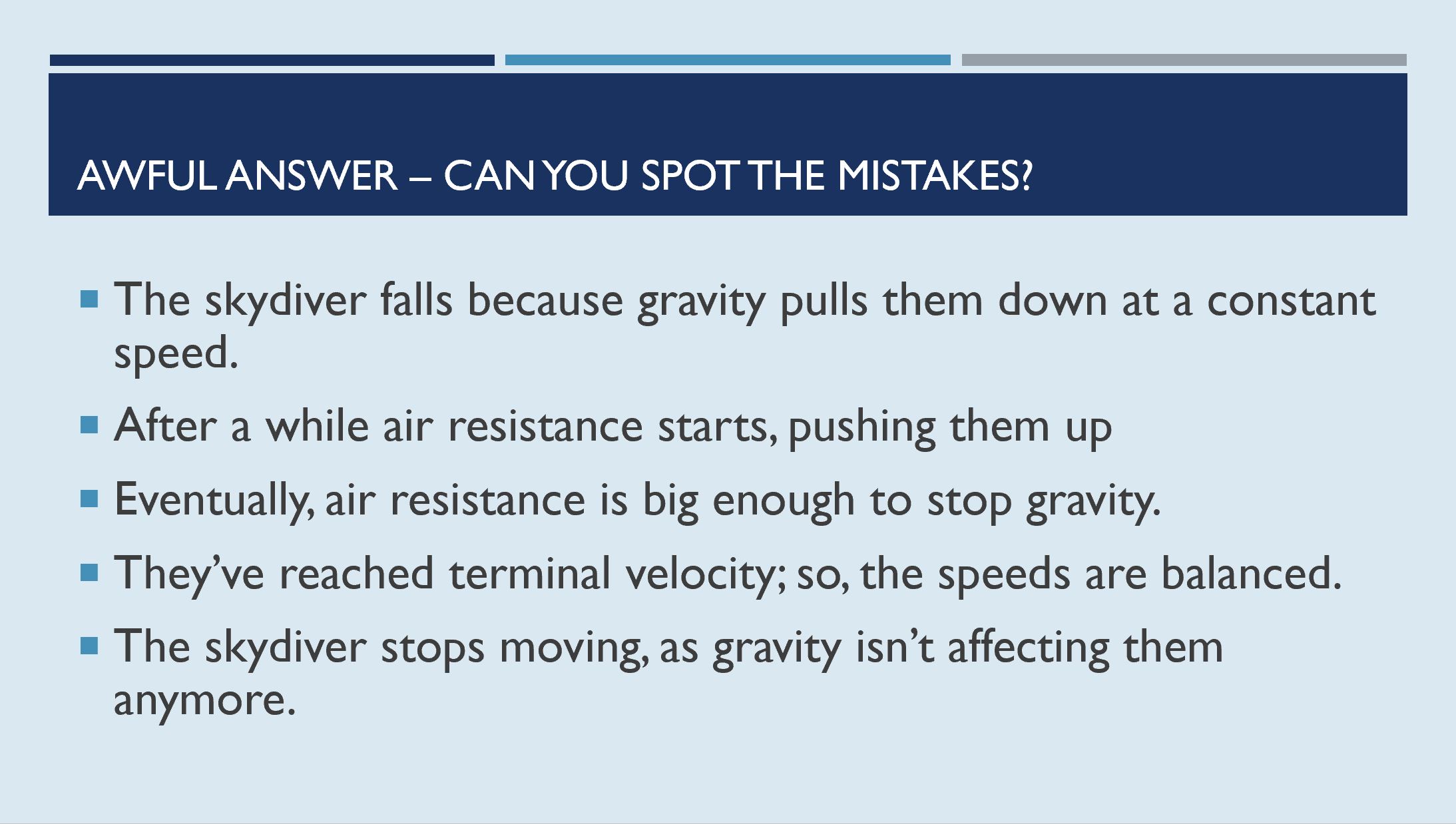

Pupils were then given the low-quality answer and, again in their groups, had to find and discuss the common misconceptions. Because this topic attracts many common mistakes, this was an extremely useful exercise: it showed pupils the sort of errors they might make and the importance of including relevant detail in their answers.

This task was followed up with exam questions on the topic, completed independently, to give pupils further opportunities to apply their understanding and embed this learning. The pupils scored well on a similar question in their end-of-topic test.

The scenario in this case study is genuine and based on real events and data; however, its narration has been crafted by AI to maintain a standardised and clear format for readers.

Key Learning

This was a beneficial exercise that I feel improved both the quality of my teaching and pupils' understanding of this topic. Pupils' written answers following this task were notably well structured and avoided common misconceptions. In previous years, I would not have included a task like this in my lessons. Writing my own high- and low-quality answers would have been too time-consuming, as would collecting and storing previous pupils' work for future years.

Teacher input remains crucial for adapting the AI's output so that it stays relevant to the exam board and to the educational context of the class. I feel the outputs could be improved further by including a mark scheme in the initial prompt. This would give the LLM clearer context on what a model answer should contain.

Pupils knowing that the work was AI-generated revealed a hidden benefit: they were far more willing to critique and evaluate honestly the work of an AI than they would be the work of a peer. This led to more open and frank reflection on the answers during the discussion task. I will continue to use this prompt to guide pupils in writing answers to longer written questions.

Generic version of the prompt you can adapt: You are an expert <subject> teacher with an in-depth knowledge of pedagogical techniques and decades of experience in helping pupils learn. Your Y<year> class have just finished learning about <topic> and are now about to answer the following question: <question>. Write two answers to this question. One should be an exemplar answer achieving full marks. The other should contain several mistakes and misconceptions receiving very few marks. Limit each answer to <word limit> words. Explain why the great answer is great and why the poor answer is wrong. Ask me some clarification questions first and then produce the answers.

Risks

The answers produced need to be thoroughly reviewed and adapted by the teacher to ensure they are in the desired style and are relevant to the class’s context.

Providing the high- and low-quality answers at the same time can place a heavy cognitive load on pupils and risks them conflating the two.

By giving pupils a high-quality answer ready to go, there is a risk that they will be less able to evaluate ideas and produce similar answers independently in new scenarios.